4 Mixed Strategies in Strategic-Form Games

%if doesn’t work

%random variable %random vector or matrix%differentiated vector or matrix

%#TODO%#TODO

% Basic notation - general % Basic notation - general % Special symbols % Basic functions %image %cardinality % Operators - Analysis % Linear algebra % Linear algebra - Matrices % Statistics %at time % Useful commands % Game Theory %T-stage game %strategy in a repeated game %successor function %player function%action function

% Optimization$$

4.1 Mixed Strategies

As pointed out before, neither of the solution concepts discussed so far has to exist in pure strategies, e.g. Example 4.1.

Example 4.1 (Rock-Paper-Scissors) Consider a game

| \(R\) | \(P\) | \(S\) | |

|---|---|---|---|

| \(R\) | \((0,0)\) | \((-1,1)\) | \((1,-1)\) |

| \(P\) | \((1,-1)\) | \((0,0)\) | \((-1,1)\) |

| \(S\) | \((-1,1)\) | \((1,-1)\) | \((0,0)\) |

There are no strictly dominant pure strategies and no strategy is strictly dominated (IESDS removes nothing). Also, each strategy is a best response to some strategy of the opponent (rationalizability removes nothing). Finally, there are no pure Nash equilibria, as no pure strategy profile allows each player to play a best response to the strategy of the other player.

How to solve this? Let the players randomize their choice of pure strategies.

Definition 4.1 Let \(A\) be a finite set. A probability distribution over \(A\) is a function \(\sigma: A \to [0,1]\) such that \(\sum_{a \in A} \sigma(a) = 1\)

We denote by \(\Delta(A)\) the set of all probability distributions over \(A\).

Consider two strategies \(A_1, A_2\), then their respective probabilities are \(p\), \(1 - p\). For three strategies \(A_1, A_2, A_3\) they span a subspace of \(\mathbb{R}^3\) such that \(1 = \sigma(A_1) + \sigma(A_2) + \sigma(A_3)\) – this gives a part of the plane (simplex).

Example 4.2 Consider \(A = \left\{a,b,c\right\}\) and a function \(\sigma:A \to [0,1]\) such that \(\sigma(a) = 1/4, \sigma(b) = 3/4\) and \(\sigma(c) = 0\). Then \(\sigma\) in \(\Delta(A)\).

Let us fix a strategic-form game \(G = (N, (S_i)_{i \in N}, (u_i)_{i \in N})\).

From now on, assume two players and both \(S_i\) finite!

Definition 4.2 (Mixed Strategy) A mixed strategy of player \(i\) is a probability distribution \(\sigma\in \Delta(S_i)\) over \(S_i\). We denote by \(\Sigma_i\) = \(\Delta(S_i)\) the set of all mixed strategies of player \(i\). We define \(\Sigma = \Sigma_1 \times \Sigma_2\), the set of all mixed strategy profiles.

We identify each \(s_i \in S_i\) with a mixed strategy \(\sigma\) that assigns probability one to \(s_i\) (and zero to other pure strategies). Let \(\boldsymbol{\sigma}= (\sigma_1, \sigma_2)\) be a mixed strategy profile. Intuitively, we assume that each player \(i\) randomly selects his pure strategy according to \(\sigma_i\) and independently of his opponents. Thus for \(\boldsymbol{s} = (s_1, s_2) \in S = S_1 \times S_2\) we have that \[ \boldsymbol{\sigma}(\boldsymbol{s}) := \sigma_1(s_1) \cdot \sigma_2(s_2) \] is the probability that the players randomly select the pure strategy profile \(\boldsymbol{s}\) according to the mixed strategy profile \(\boldsymbol{\sigma}\).

We abuse notation a bit here: \(\boldsymbol{\sigma}\) denotes two things, a vector of mixed strategies as well as a probability distribution on \(S\).

Example 4.3 (Rock-Paper-Scissors) Consider a game of Rock-Paper-Scissors given by

| \(R\) | \(P\) | \(S\) | |

|---|---|---|---|

| \(R\) | \((0,0)\) | \((-1,1)\) | \((1,-1)\) |

| \(P\) | \((1,-1)\) | \((0,0)\) | \((-1,1)\) |

| \(S\) | \((-1,1)\) | \((1,-1)\) | \((0,0)\) |

As an example of mixed strategy \(\sigma_1\) consider \[ \sigma_1(R) = \frac 1 2, \quad \sigma_1(P) = \frac 1 3, \quad \sigma_1(S) = \frac 1 6, \] which we sometimes write as \((\frac 1 2(R), \frac 1 3(P), \frac 1 6(S))\), or only \((\frac 1 2, \frac 1 3, \frac 1 6)\) if the order of pure strategies is fixed. Now assume a mixed strategy profile \(\boldsymbol{\sigma}= (\sigma_1, \sigma_2)\) where \[ \sigma_1 = \left( \frac 1 2(R), \frac 1 3(P), \frac 1 6(S) \right), \quad \sigma_2 = \left( \frac 1 3(R), \frac 2 3(P), 0(S) \right). \]

Then the probability \(\boldsymbol{\sigma}(R,P)\) that the pure strategy profile \((R,P)\) will be played by players playing the mixed profile \((\sigma_1, \sigma_2)\) is \[ \sigma_1(R) \cdot \sigma_2(P) = \frac 1 2 \cdot \frac 2 3 = \frac 1 3 \]

But now what is the suitable notion of payoff?

Definition 4.3 (Expected Payoff) The expected payoff of player \(i\) under a mixed strategy profile \(\boldsymbol{\sigma}\in \Sigma\) is \[ u_i(\boldsymbol{\sigma}) := \sum_{\boldsymbol{s} \in S} \boldsymbol{\sigma}(\boldsymbol{s}) u_i(\boldsymbol{s}) = \sum_{s_1 \in S_1} \sum_{s_2 \in S_2} \sigma_1(s_1) \sigma_2(s_2) u_i(s_1, s_2), \]

i.e. it is the “weighted average” of what player \(i\) wins under each pure strategy profile \(\boldsymbol{s}\), weighted by the probability of that profile.

Proposition 4.1 Every rational player strives to maximize his own expected payoff.

This assumption is not always completely convincing… Not everyone must be fond of lottery (and still be rational).

Example 4.4 (Matching Pennies) Consider a game Matching Pennies given by

| \(H\) | \(T\) | |

|---|---|---|

| \(H\) | \((1,-1)\) | \((-1,1)\) |

| \(T\) | \((-1,1)\) | \((1,-1)\) |

Each player secretly turns a penny into heads or tails, and then they reveal their choices simultaneously. If the pennies match, player 1 (row) wins, if they do not match, player 2 (column) wins. Now, consider \(\sigma_1 = (1/3(H), 2/3(T))\) and \(\sigma_2 = (1/4(H), 3/4(T))\), then \[ \begin{gathered} u_1(\sigma) = \sum_{(X,Y) \in \left\{H,T\right\}^2} \sigma_1(X) \sigma_2(Y)u_1(X,Y) = \dots = \frac 1 6 \\ u_2(\sigma) = \sum_{(X,Y) \in \left\{H,T\right\}^2} \sigma_1(X) \sigma_2(Y)u_2(X,Y) = \dots = - \frac 1 6 \end{gathered} \]

4.2 Solution Concepts

We revisit the following solution concepts in mixed strategies:

- strictly dominant strategy equilibrium,

- IESDS equilibria,

- rationalizable equilibria,

- Nash equilibria;

From now on, when we say a strategy we implicitly mean a mixed strategy.

In order to deal with efficiency issues we assume that the size of the game \(G\) is defined by \(|G| := |N| + \sum_{i \in N} |S_i| + \sum_{i \in N} |u_i|\) where \(|u_i| = \sum_{\boldsymbol{s} \in S} |u_i(\boldsymbol{s})|\) and \(|u_i(\boldsymbol{s})|\) is the length of a binary encoding of \(u_i(\boldsymbol{s})\) (we assume that rational numbers are encoded as quotients of two binary integers). Note that, in particular, \(|G| > |S|\). This will be later needed for the complexity of certain algorithms in relation to game size.

Definition 4.4 Let \(\sigma_1, \sigma_1' \in \Sigma_1\) be (mixed) strategies of player 1. Then \(\sigma_1'\) is strictly dominated by \(\sigma_1\) (write \(\sigma_1' \prec\sigma_1\)) if \[ u_1(\sigma_1, s_2) > u_1(\sigma_1', s_2) \] for all \(s_2 \in S_2\). Symmetrically for player 2.

The above condition is equivalent to \[ u_1(\sigma_1, \sigma_2) > u_1(\sigma_1', \sigma_2) \] for all \(\sigma_2 \in \Sigma_2\).

Example 4.5 Consider a game

| \(X\) | \(Y\) | |

|---|---|---|

| \(A\) | \(3\) | \(0\) |

| \(B\) | \(0\) | \(3\) |

| \(C\) | \(1\) | \(1\) |

Is there a strictly dominated strategy? Here \(\boldsymbol{\sigma}= (1/2, 1/2, 0)\) dominates \((0,0,1)\), aka the pure \(C\), strategy. Then expected payoffs for strategies of this game can be plotted as seen in Figure 4.1.

Question: Is there a game with at least one strictly dominated strategy but without strictly dominated pure strategies? Yes, consider the following game, where \(C\) strictly dominates the mixed strategy \((1/2, 1/2, 0)\):

Definition 4.5 A mixed strategy \(\sigma_i \in \Sigma_i\) is strictly dominant if every other mixed strategy of player \(i\) is strictly dominated by \(\sigma_i\).

Definition 4.6 A strategy profile \(\boldsymbol{\sigma}\in \Sigma\) is a strictly dominant strategy equilibrium if \(\sigma_i \in \Sigma_i\) is strictly dominant for all \(i \in N\).

Proposition 4.2 If the strictly dominant strategy equilibrium exists, it is unique, all its strategies are pure, and rational players will play it.

Proof. Let \(\boldsymbol{\sigma}^* = (\sigma^*_1, \sigma^*_2) \in \Sigma\) be a strictly dominant strategy equilibrium. First, without loss of generality, let us assume that for player 1 there exists a non-empty non-singular subset of his pure strategies \(S'_1 \subseteq S_1\) such that \(\sigma_1^* (s_{1,j}) > 0\) for all \(j \in \left\{1, \dots, |S_1'|\right\}\) with \(s_{1,j} \in S_1'\), i.e. \(|\mathrm{supp}\left( \sigma_1^* \right)| = |S_1'| > 1\). Then per Definition 4.3, we get \[\begin{align*} u_1(\sigma_1^*, \sigma_2^*) &= \sum_{s_{1,j} \in S_1'}\sum_{s_2 \in S_2} \sigma_1^*(s_{1,j}) \sigma_2^* (s_2) u_1(s_{1,j}, s_2) \\ &= \sum_{s_{1,j} \in S_1'} \sigma_1^*(s_{1,j}) \underbrace{\sum_{s_2 \in S_2} \sigma_2^*(s_2) u_1(s_{1,j}, s_2)}_{U_1(s_{1,j}; \sigma^*_2)} \\ &= \sum_{s_{1,j} \in S_1'} \sigma_1^*(s_{1,j}) U_1(s_{1,j}; \sigma^*_2) \end{align*}\]

Because \(\boldsymbol{\sigma}^*\) is a strictly dominant strategy equilibrium, then per Definition 4.6, it must hold that \(U_1(s_{1,j}; \sigma^*_2) \neq U_1(s_{1,k}; \sigma^*_2)\) for \(j \neq k\), otherwise one could arbitrarily distribute probabilities of \(\sigma^*_1\) between \(s_{1,j}\) and \(s_{1,k}\) without changing the payoff for player 1, which is a contradiction. Hence there exists \(J \in \left\{1, \dots, |S_1'|\right\}\) such \(U_1(s_{1,J}; \sigma^*_2) > U_1(s_{1,j}; \sigma^*_2)\) for every \(j \in \left\{1, \dots, |S_1'|\right\}\) with \(j \neq J\). But then by choosing \(\sigma_1'(s_{1, J}) = 1\) and \(\sigma_1'(s_1) = 0\) for every \(s_1 \in S_1\) such that \(s_1 \neq s_{1,J}\) (i.e. playing the pure strategy \(s_{1,J}\)), we get \[ u_1(\sigma_1', \sigma_2^*) = U_1(s_{1,J}; \sigma^*_2) > \underbrace{\sum_{s_{1,j} \in S_1'} \sigma_1^*(s_{1,j})}_{= 1} \overbrace{U_1(s_{1,j}; \sigma^*_2)}^{< U_1(s_{1,J}; \sigma^*_2)} = u_1(\sigma_1^*, \sigma_2^*), \] which is a contradiction with the fact that \(\boldsymbol{\sigma}^*\) is a strictly dominant strategy equilibrium. Thus any strictly dominant strategy equilibrium is comprised of only pure strategies. Then by Corollary 3.1, we obtain that \(\boldsymbol{\sigma}^*\) is unique and that rational players will play it, which concludes the proof.

Thus, to compute the strictly dominant strategy equilibrium, it is sufficient to consider only pure strategies.

Definition 4.7 (IESDS in Mixed Strategies) Define a sequence \(D_i^0, D_i^1, D_i^2, \dots\) of strategy sets of player \(i\). Also denote by \(G_{DS}^{k}\) the game obtained from \(G\) by restricting to \(D_i^k\), \(i \in N\). We shall call the following algorithm “Iterated Elimination of Strictly Dominated Strategies”:

- Initialize \(k = 0\) and \(D_i^0 = S_i\) for each \(i \in N\).

- For all players \(i \in N\): Let \(D^{k+1}_i\) be the set of all pure strategies of \(D_i^k\) that are not strictly dominated in \(G_{DS}^{k}\) by mixed strategies.

- If \(D^{k+1}_i = D^{k}_i\) for all players \(i \in N\), then stop. Otherwise, let \(k := k+1\) and go to 2.

We say that \(s_i \in S_i\) survives IESDS if \(s_i \in D_i^k\) for all \(k = 0, 1, 2,\dots\) (or until stop).

Definition 4.8 A strategy profile \(\boldsymbol{s} = (s_1, s_2) \in S\) is an IESDS equilibrium if both \(s_1\) and \(s_2\) survive IESDS.

Each \(D^{k+1}_i\) can be computed in polynomial time using linear programming.

Example 4.6 Consider a game

| \(X\) | \(Y\) | |

|---|---|---|

| \(A\) | \(3\) | \(0\) |

| \(B\) | \(0\) | \(3\) |

| \(C\) | \(1\) | \(1\) |

Let us have a look at the first iteration of IESDS. Observe that \(A,B\) are not strictly dominated by any mixed strategy. Let us construct a set of constraints on mixed strategies (possibly) strictly dominating \(C\):

\[ \begin{aligned} 3x_A + 0 x_B + x_C > 1 \\ 0x_A + 3 x_B + x_C > 1 \\ x_A, x_B, x_C \geq 0 \\ x_A + x_B + x_C = 1 \end{aligned} \]

4.3 Linear Programming

Linear programming is a technique for the optimization of a linear objective function, subject to linear (non-strict) inequality constraints. Formally, a linear program in so-called canonical form looks like this: \[\begin{align*} \max &\sum_{j = 1}^m c_j x_j && \\ \text{s.t.}\;&\sum_{j = 1}^m a_{ij} x_j \leq b_i && 1 \leq i \leq n \\ & x_j \geq 0 && 1 \leq j \leq m \end{align*}\]

Here \(a_{ij}, b_k\) and \(c_j\) are real numbers and \(x_j\)’s are real variables. A feasible solution is an assignment of real numbers to the variables \(x_j, 1 \leq j \leq m\), so that the constraints are satisfied. An optimal solution is a feasible solution that maximizes the objective function \(\sum_{j = 1}^m c_j x_j\). We assume that coefficients \(a_{ij}, b_k\) and \(c_j\) are encoded in binary (more precisely, as fractions of two integers encoded in binary).

Theorem 4.1 (Khachiyan, Doklady Akademii Nauk SSSR, 1979) There is an algorithm that for any linear program computes an optimal solution in polynomial time.

The algorithm uses the so-called ellipsoid method. In practice, the Khachiyan’s is not used. Usually simplex algorithm is used even though its theoretical complexity is exponential. There is also a polynomial time algorithm (by Karmarkar) which has better complexity upper bounds than the Khachiyan’s and sometimes works even better than the simplex. There exist several advanced linear programming solvers (usually parts of larger optimization packages) implementing various heuristics for solving large-scale problems, sensitivity analysis, etc.

Example 4.7 (continued Example 4.6) The linear program for deciding whether \(C\) is strictly dominated: The program maximizes \(y\) under the following constraints:

\[ \begin{aligned} 3x_A + 0 x_B + x_C \geq 1 + y \\ 0x_A + 3 x_B + x_C \geq 1 + y \\ x_A, x_B, x_C \geq 0 \\ x_A + x_B + x_C = 1 \\ y \geq 0 \end{aligned} \]

Here \(y\) just implements the strict inequality using \(\geq\), we look for a solution with \(y > 0\). The maximum \(y = \frac 1 2\) is attained at \(x_A = \frac 1 2\) and \(x_B = \frac 1 2\). Note that in step 2 of Definition 4.7, it is not sufficient to consider domination by only pure strategies. Consider the following zero-sum game

| \(X\) | \(Y\) | |

|---|---|---|

| \(A\) | \(3\) | \(0\) |

| \(B\) | \(0\) | \(3\) |

| \(C\) | \(1\) | \(1\) |

Here \(C\) is strictly dominated by \((\sigma_1(A), \sigma_1(B), \sigma_1(C)) = \left( \frac 1 2, \frac 1 2, 0 \right)\), but no strategy is strictly dominated in pure strategies.

4.4 Best Response in Mixed Strategies

Definition 4.9 A (mixed) belief of player 1 is a mixed strategy \(\sigma_2\) of player 2 (and vice versa).

Definition 4.10 A mixed strategy \(\sigma_1 \in \Sigma_1\) is a best response to a belief \(\sigma_2 \in \Sigma_2\) if \[ u_1(\sigma_1, \sigma_2) \geq u_1(s_1, \sigma_2) \] for all \(s_1 \in S_1\). Denote by \(\mathrm{BR}_{1}\left( \sigma_2 \right)\) the set of all best responses of player 1. Symmetrically for player 2.

The above condition is equivalent to \[ u_1(\sigma_1, \sigma_2) \geq u_1(\sigma_1', \sigma_2) \] for all \(\sigma_1' \in \Sigma_1\).

Example 4.8 Consider a game with the following payoffs for player 1:

| \(X\) | \(Y\) | |

|---|---|---|

| \(A\) | \(2\) | \(0\) |

| \(B\) | \(0\) | \(2\) |

| \(C\) | \(1\) | \(1\) |

- Player 1 (row) plays \(\sigma_1 = (a(A), b(B), c(C))\).

- Player 2 (column) plays \((q(X), (1 − q)(Y))\) (we write just \(q\)).

Because \[ \begin{gathered} u_1(A, q) = 2q \\ u_1(B, q) = 2 (1 - q)\\ u_1(C, q) = 1 \end{gathered} \] then \[ \mathrm{BR}_{1}\left( q \right) = \begin{cases} A, & q > \frac 1 2, \\ B, & q < \frac 1 2, \\ \left\langle (A,B,C), \boldsymbol{c} \right\rangle, \; \sum \boldsymbol{c} = 1, & q = \frac 1 2. \end{cases} \]

For \(\sigma_1\) such that \(\sigma_1(A), \sigma_1(B) > 0\) and \[ u_1(A, q) < u_1(B, q), \] then \(\bar \sigma_1\) such that \[ \begin{gathered} \bar \sigma_1(A) = 0, \\ \bar \sigma_1(B) = \sigma_1(A) + \sigma_1(B) \end{gathered} \] is a better response.

4.5 Rationalizability in Mixed Strategies (Two Players)

We will begin with an assumption: A rational player 1 with a belief \(\sigma_2\) always plays a best response to \(\sigma_2\) (the same for player 2).

Definition 4.11 A pure strategy \(s_1 \in S_1\) of player 1 is never best response if it is not a best response to any belief \(\sigma_2\) (similarly for player 2).

No rational player plays a strategy that is never best response.

Definition 4.12 (Rationalizable) Define a sequence \(R_i^0, R_i^1, \dots\) of strategy sets of player \(i\). Also, denote by \(G_{Rat}^{k}\) the game obtained from \(G\) by restricting to \(R_i^k\) for \(i \in N\). Consider the following algorithm

- Initialize \(k = 0\) and \(R_i^0 = S_i\) for each \(i \in N\).

- For all players \(i \in N\): Let \(R_i^{k+1}\) be the set of all strategies of \(R_i^k\) that are best responses to some (mixed) beliefs in \(G_{Rat}^{k}\).

- Let \(k := k+1\) and go to 2.

We say that \(s_i \in S_i\) is rationalizable if \(s_i \in R_i^k\) for all \(k = 0, 1, 2, \dots\) (or until stop).

Definition 4.13 A strategy profile \(\boldsymbol{s} = (s_1, s_2) \in S\) is a rationalizable equilibrium if both \(s_1\) and \(s_2\) are rationalizable.

Example 4.9 Consider again a game \(G\) given by

| \(X\) | \(Y\) | |

|---|---|---|

| \(A\) | \(3\) | \(0\) |

| \(B\) | \(0\) | \(3\) |

| \(C\) | \(1\) | \(1\) |

What pure strategies of player 1 are strictly dominated? In pure strategies, \(C\) is not strictly dominated, but it is never best response. In mixed strategies, the strategy \(\left( \frac 1 2(A), \frac 1 2 (B), 0(C) \right)\) strictly dominates \(C\). What pure strategies of player 1 are never best responses? We can make the following observation: The set of strictly dominated pure strategies coincides with the set of pure never best responses! … and this holds in general for two-player games:

Theorem 4.2 A pure strategy \(s_1\) of player 1 is never best response to any belief \(\sigma_2\) if and only if \(s_1\) is strictly dominated by a strategy \(\sigma_1 \in \Sigma_1\) (similarly for player 2).

Corollary 4.1 It follows that a strategy of \(S_i\) survives IESDS \(\iff\) it is rationalizable.

As opposed to pure strategies, the IESDS and rationalizability coincide in mixed strategies.

4.6 Mixed Nash Equilibria

Definition 4.14 A mixed-strategy profile \(\boldsymbol{\sigma}^* = (\sigma_1^*, \sigma_2^*) \in \Sigma\) is a (mixed) Nash equilibrium if \(\sigma_1^*\) is a best response to \(\sigma_2^*\) and \(\sigma_2^*\) is a best response to \(\sigma_1^*\). That is \[ \begin{gathered} u_1(\sigma_1^*, \sigma_2^*) \geq u_1(s_1, \sigma_2^*) \quad \forall s_1 \in S_1, \\ u_2(\sigma_1^*, \sigma_2^*) \geq u_2(\sigma_1^*, s_2) \quad \forall s_2 \in S_2. \end{gathered} \]

Theorem 4.3 (Nash) Every finite game in strategic form has a Nash equilibrium.

Example 4.10 Consider a game given by

| \(H\) | \(T\) | |

|---|---|---|

| \(H\) | \((1,-1)\) | \((-1,1)\) |

| \(T\) | \((-1,1)\) | \((1,-1)\) |

Player 1 (row) plays \((p(H), (1 − p)(T))\) (we write just \(p\)) and player 2 (column) plays \((q(H), (1 − q)(T))\) (we write \(q\)). Compute all Nash equilibria. What are the expected payoffs of playing pure strategies for player 1? From \[ u_1(H,q) = 2q-1 \qquad \land \qquad u_1(T,q) = 1 - 2q, \] we get \[ u_1(p,q) = pu_1(H,q) + (1-p)u_1(T,q)=p(2q-1) + (1-p)(1-2q). \]

We can obtain the best response correspondence \(\mathrm{BR}_{1}\left( q \right)\) \[ \mathrm{BR}_{1}\left( q \right) = \begin{cases} T, & q < \frac 1 2, \\ p \in [0,1], & q=\frac 1 2, \\ H, & q > \frac 1 2 \end{cases} \] and we can repeat the same process for player 2. The only situation where they both play their best response to each other – the intersection of \(\mathrm{BR}_{1}\left( q \right)\) and \(\mathrm{BR}_{2}\left( p \right)\) – is the only Nash equilibrium \(\boldsymbol{\sigma}= (\sigma_1, \sigma_2) = (\frac 1 2, \frac 1 2)\).

4.6.1 Properties of Mixed Nash Equilibria

Lemma 4.1 Every Nash equilibrium \(\boldsymbol{\sigma}^* = (\sigma_1^*, \sigma_2^*) \in \Sigma\) satisfies

- \(u_1(s_1, \sigma_2^*) = u_1(\boldsymbol{\sigma}^*)\) for \(s_1 \in \mathrm{supp}\left( \sigma_1^* \right)\);

- \(u_2(\sigma_1^*, s_2) = u_1(\boldsymbol{\sigma}^*)\) for \(s_2 \in \mathrm{supp}\left( \sigma_2^* \right)\).

Proof. Let’s now prove Lemma 4.1. Without loss of generality, consider only player 1 and assume that \(\boldsymbol{\sigma}^*\) is a Nash equilibrium. The latter assumption implies \(u_1(s_1, \sigma^*_2) \leq u_1(\boldsymbol{\sigma}^*)\) for all \(s_1 \in S_1\). Now, if there exists \(s_1' \in \mathrm{supp}\left( \sigma_1^* \right) \subseteq S_1\) satisfying \(u_1(s_1', \sigma_2^*) < u_1(\boldsymbol{\sigma}^*)\), then because \(\sigma^*(s_1') > 0\) we have \[ \begin{aligned} u_1(\boldsymbol{\sigma}^*) &= \sum_{s_1 \in S_1} \sigma^*_1(s_1)u_1(s_1, \sigma_2^*) \\ &= \sigma_1^*(s_1') \underbrace{u_1(s_1', \sigma_2^*)}_{< u_1(\boldsymbol{\sigma}^*)} + \sum_{s_1 \in S_1 \setminus \left\{s_1'\right\}} \sigma_1^*(s_1) \underbrace{u_1(s_1, \sigma_1^*)}_{\leq u_1(\boldsymbol{\sigma}^*)} \\ &< \sigma_1^*(s_1') u_1(\boldsymbol{\sigma}^*) + \sum_{s_1 \in S_1 \setminus \left\{s_1'\right\}} \sigma^*_1(s_1) u_1(\boldsymbol{\sigma}^*) \\ &= \sum_{s_1 \in S_1} \sigma_1^*(s_1) u_1(\boldsymbol{\sigma}^*) = u_1(\boldsymbol{\sigma}^*), \end{aligned} \] which is a contradiction. Note that we could only sum over the \(\mathrm{supp}\left( \sigma_1^* \right) \subseteq S_1\). Thus \(u_1(s_1, \sigma_2^*) = u_1(\boldsymbol{\sigma}^*)\) for all \(s_1 \in S_1\).

Intuitively, not playing simply a pure strategy can give you a better utility. But if it were to give you less, it would drag the whole averaged utility of the equilibrium down, which would be a contradiction with the initial utility value of the equilibrium.

Example 4.11 Consider again a game given by

| \(H\) | \(T\) | |

|---|---|---|

| \(H\) | \((1,-1)\) | \((-1,1)\) |

| \(T\) | \((-1,1)\) | \((1,-1)\) |

Player 1 (row) plays \((p(H), (1 − p)(T))\) (we write just \(p\)) and player 2 (column) plays \((q(H), (1 − q)(T))\) (we write \(q\)). Compute all Nash equilibria.

Firstly, there are no pure strategy equilibria. Also, there are no equilibria where only player 1 randomizes (in other words, e.g. \(|\mathrm{supp}\left( \sigma_1^* \right)| = 1\) and \(|\mathrm{supp}\left( \sigma_2^* \right)| = 2\)): Indeed, assume that \((p, H)\) is such an equilibrium. Then by Lemma 4.1 \[ 1 = u_1(H,H) = u_1(T,H) = -1 \] is a contradiction. Also, \((p,T)\) cannot be an equilibrium. Assume now both players randomize, so \(p,q \in (0,1)\). The expected payoffs of playing pure strategies for player 1: \[ u_1(H,q) = 2q-1 \quad \land \quad u_1(T,q) = 1 - 2q \] and similarly \[ u_2(p, H) = 1-2p \quad \land \quad u_2(p, T) = 2p - 1. \] Again, by Lemma 4.1, such Nash equilibria must satisfy \[ 2q-1 = 1-2q \quad \land \quad 2p - 1 = 1 -2p, \] which are linear equations and from them we get \(p = q = \frac 1 2\) – the only Nash equilibrium.

In other words, we try to remove the element of randomness as much as we can by considering Lemma 4.1 and pure strategies.

Example 4.12 Consider the Battle of Sexes game given by the following table:

| \(O\) | \(F\) | |

|---|---|---|

| \(O\) | \((2,1)\) | \((0,0)\) |

| \(F\) | \((0,0)\) | \((1,2)\) |

Clearly, there are two pure strategy equilibria \((O,O)\) and \((F,F)\), and no Nash equilibrium where only one player randomizes. Now assume that

- player 1 (row) plays \((p(O), (1-p)F)\) (we write just \(p\)) and

- player 2 (column) plays \((q(O), (1-q)F)\) (we write just \(q\)),

where \(p,q \in (0,1)\). By Lemma 4.1, such Nash equilibria must satisfy \[ 2q = 1 - q \quad \land \quad p = 2(1 - p), \] which holds only for \(q = \frac 1 3\) and \(p = \frac 2 3\).

What did we do in these examples (see Example 4.11 and Example 4.12)? We went through all support combinations for both players.

(pure, one player mixing, both mixing)

For each pair of support sets we tried to find equilibria in strategies with these supports.

(in Battle of Sexes: two pure, no equilibrium with just one player mixing, one equilibrium when both mixing)

Whenever one of the supports was non-singleton, we reduced the computation of Nash equilibria to linear equations.

Lemma 4.2 Let \(\boldsymbol{\sigma}^* = (\sigma_1^*, \sigma_2^*) \in \Sigma\) be a mixed profile. Assume there exists \(w_1, w_2 \in \mathbb{R}\) such that

- \(u_1(s_1, \sigma_2^*) = w_1\) for \(s_1 \in \mathrm{supp}\left( \sigma_1^* \right)\),

- \(u_1(s_1, \sigma_2^*) \leq w_1\) for \(s_1 \notin \mathrm{supp}\left( \sigma_1^* \right)\),

- \(u_2(\sigma_2^*, s_2) = w_2\) for \(s_2 \in \mathrm{supp}\left( \sigma_2^* \right)\),

- \(u_2(\sigma_2^*, s_2) \leq w_2\) for \(s_2 \notin \mathrm{supp}\left( \sigma_2^* \right)\).

Then \(u_1(\boldsymbol{\sigma}^*) = w_1\) and \(u_2(\boldsymbol{\sigma}^*) = w_2\), and \(\boldsymbol{\sigma}^*\) is a Nash equilibrium.

Proof. Consider just the player 1 (for player 2 similarly): \[ \begin{aligned} u_1(\boldsymbol{\sigma}^*) &= \sum_{s_1 \in S_1} \sigma_1^*(s_1) u_1(s_1, \sigma^*_2) \\ &= \sum_{s_1 \in \mathrm{supp}\left( \sigma_1^* \right)} \sigma_1^*(s_1) u_1(s_1, \sigma_2^*) \\ &= \sum_{s_1 \in \mathrm{supp}\left( \sigma_1^* \right)} \sigma_1^*(s_1)w_1 \\ &= w_1 \sum_{s_1 \in \mathrm{supp}\left( \sigma_1^* \right)} \sigma_1^*(s_1) = w_1. \end{aligned} \]

Now the fact that \(\boldsymbol{\sigma}^*\) is a Nash equilibrium follows from the definition.

4.7 Computing Nash Equilibria

Every Nash equilibrium \(\boldsymbol{\sigma}^*\) can be computed by finding appropriate \(w_1, w_2\) so that

- \(u_1(s_1, \sigma_2^*) = w_1\) for \(s_1 \in \mathrm{supp}\left( \sigma_1^* \right)\),

- \(u_1(s_1, \sigma_2^*) \leq w_1\) for \(s_1 \notin \mathrm{supp}\left( \sigma_1^* \right)\),

- \(u_2(\sigma_2^*, s_2) = w_2\) for \(s_2 \in \mathrm{supp}\left( \sigma_2^* \right)\),

- \(u_2(\sigma_2^*, s_2) \leq w_2\) for \(s_2 \notin \mathrm{supp}\left( \sigma_2^* \right)\).

Indeed,

- by Lemma 4.2, all \(\boldsymbol{\sigma}^*\) and \(w_1, w_2\) satisfying the above inequalities give a Nash equilibrium \(\boldsymbol{\sigma}^*\) with \(u_1(\boldsymbol{\sigma}^*) = w_1\) and \(u_2(\boldsymbol{\sigma}^*) = w_2\),

- by Lemma 4.1, for every Nash equilibrium \(\boldsymbol{\sigma}^*\) choosing \(w_1 = u_1(\boldsymbol{\sigma}^*)\) and \(w_2 = u_2(\boldsymbol{\sigma}^*)\) satisfies the above inequalities.

Suppose that we somehow know the supports \(\mathrm{supp}\left( \sigma_1^* \right), \mathrm{supp}\left( \sigma_2^* \right)\) for some Nash equilibrium \(\boldsymbol{\sigma}^* = (\sigma_1^*, \sigma_2^*)\) (which itself is unknown to us). We may consider all \(\sigma^*_i(s_i)\)’s and both \(w_1, w_2\)’s as variables and use the above conditions to design a system of inequalities capturing Nash equilibria with the given support sets \(\mathrm{supp}\left( \sigma_1^* \right), \mathrm{supp}\left( \sigma_2^* \right)\).

4.7.1 Support Enumeration

Definition 4.15 (Nash equilibrium given the support sets) To simplify notation, assume that for \(i\) we have \(S_i = \left\{1, \dots, m_i\right\}\). Then \(\sigma_i(j)\) is the probability of the pure strategy \(j\) in the mixed strategy \(\sigma_i\). Now fix supports \(\mathrm{supp}_i \subseteq S_i\) for every \(i \in \left\{1,2\right\}\) and consider the following system of constraints with variables \(\sigma_1(1), \dots, \sigma_1(m_1), \sigma_2(1), \dots, \sigma_2(m_2), w_1, w_2\):

- For all \(k \in \mathrm{supp}_1\) and \(l \in \mathrm{supp}_2\): \[ u_1(k, \sigma_2) = \sum_{l' \in S_2} \sigma_2(l') u_1(k, l') = w_1, \quad \sum_{k' \in S_1} \sigma_1(k') u_2(k', l) = w_2. \]

- For all \(k \notin \mathrm{supp}_1\) and \(l \notin \mathrm{supp}_2\): \[ \sum_{l' \in S_2} \sigma_2(l') u_1(k, l') \leq w_1, \quad \sum_{k' \in S_1} \sigma_1(k') u_2(k', l) \leq w_2. \]

- For all \(i \in \left\{1,2\right\}\): \(\sigma_i(1) + \dots + \sigma_i(m_i) = 1\).

- For all \(i \in \left\{1,2\right\}\) and all \(k \in \mathrm{supp}_i\): \(\sigma_i(k) \geq 0\).

Technically, there should be a strict inequality, but then it wouldn’t be a linear program anymore. What’s more, it can be shown that this relaxation gives us the same solutions.

- For all \(i \in \left\{1,2\right\}\) and all \(k \notin \mathrm{supp}_i\): \(\sigma_i(k) = 0\).

Therefore we can see that the constraints are linear for the two players. So the question now is how to find the \(\mathrm{supp}_1\) and \(\mathrm{supp}_2\)… And we can simply guess!

Definition 4.16 (Algorithm for finding a Nash equilibrium) Consider a two-player strategic game \(G\) with strategy sets \(S_1 = \left\{1, \dots, m_1\right\}\) and \(S_2 = \left\{1, \dots, m_2\right\}\) and rational payoffs \(u_1, u_2\) as an input. The output of this algorithm is a Nash equilibrium \(\boldsymbol{\sigma}^*\).

For all possible \(\mathrm{supp}_1 \subseteq S_1\) and \(\mathrm{supp}_2 \subseteq S_2\):

- Check if the corresponding system of linear constraints from Definition 4.15 has a feasible solution \(\boldsymbol{\sigma}^*, w_1^*, w_2^*\)

- If so, STOP: the feasible solution \(\boldsymbol{\sigma}^*\) is a Nash equilibrium satisfying \(u_i(\boldsymbol{\sigma}^*) = w_i^*\)

Unfortunately, there are \(2^{(m_1 + m_2)}\) possible subsets \(\mathrm{supp}_1, \mathrm{supp}_2\) and as such, this algorithm requires worst-case exponential time. What’s more, we can formulate the following remarks:

The algorithm in Definition 4.16 combined with Theorem 4.3 and properties of linear programming imply that every finite two-player game has a rational Nash equilibrium (furthermore, the rational numbers have polynomial representation in binary).

The algorithm can be used to compute all Nash equilibria.

There are algorithms for computing (a finite representation of) a set of all feasible solutions of a given linear constraint system.

The algorithm can be used to compute “good” equilibria.

For example, to find a Nash equilibrium maximizing the sum of all expected payoffs (the “social welfare”) it suffices to solve the system of constraints while maximizing \(w_1 + w_2\). More precisely, the algorithm can be modified as follows:

- Initialize \(W := -\infty\) (\(W\) stores the current maximum welfare)

- For all possible \(\mathrm{supp}_1 \subseteq S_1\) and \(\mathrm{supp}_2 \subseteq S_2\):

- Find the maximum value \(\max (w_1 + w_2)\) of \(w_1 + w_2\) so that constraints are satisfiable (using linear programming)

- Put \(W:= \max\left\{W, \max(w_1 + w_2)\right\}\)

- Return \(W\).

4.8 Complexity results

Theorem 4.4 Given a two-player game in strategic form, a mixed Nash equilibrium can be computed in exponential time.

Theorem 4.5 All the following problems are NP-complete: Given a two-player game in strategic form, does it have:

- a Nash equilibrium in which player 1 has utility at least a given amount \(v\)?

- a Nash equilibrium in which the sum of expected payoffs of the two players is at least a given amount \(v\)?

- a Nash equilibrium with a support of size greater than a given number?

- a Nash equilibrium whose support contains a given strategy \(s\)?

- a Nash equilibrium whose support does not contain a given strategy \(s\)?

- …

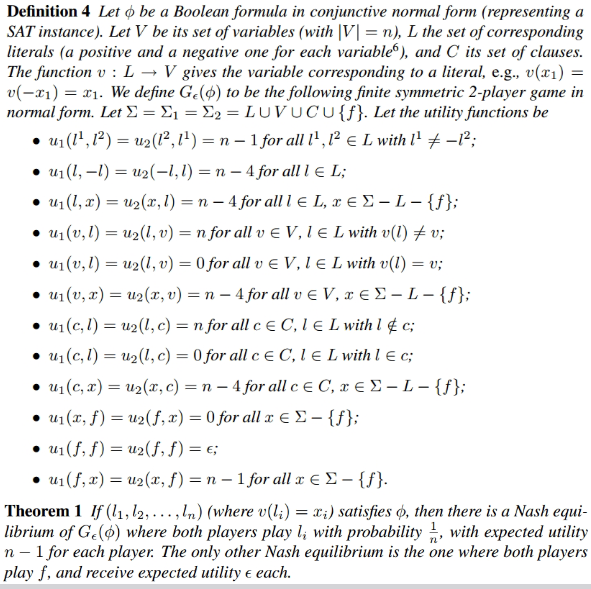

What’s more, NP-hardness can be proved using reduction from SAT, see Figure 4.2.

But the real question is what is the exact complexity of computing Nash equilibria in two-player games?

Let us concentrate on the problem of computing one Nash equilibrium (sometimes called the sample equilibrium problem). As the class NP consists of decision problems, it cannot be directly used to characterize the complexity of the sample equilibrium problem. We use complexity classes of function problems such as FP, FNP, etc. The sample equilibrium problem belongs to the complexity class PPAD (which is a subclass of TFNP) for two-player games.

A binary relation \(P(x,y)\) is in TFNP if and only if there is a deterministic polynomial time algorithm that can determine whether \(P(x,y)\) holds given both \(x\) and \(y\), and for every \(x\), there exists a \(y\) which is at most polynomially longer than \(x\) such that \(P(x,y)\) holds.

NP complexity class can be embedded into FNP by considering characteristic functions of the languages.

Can we do better than FNP (i.e. exponential time)? In what follows we show that the sample equilibrium problem can be solved in polynomial time for zero-sum two-player games.

4.8.1 Zero-sum Games

Definition 4.17 A mixed strategy \(\sigma^*_1 \in \Sigma_1\) is a maxmin strategy of player 1 if \[ \sigma^*_1 \in \mathop{\mathrm{argmax}}_{\sigma_1 \in \Sigma_1} \min_{s_2 \in S_2} u_1(\sigma_1, s_2), \tag{4.1}\]

where \(s_2 \in S_2\) can be swapped for \(\sigma_2 \in \Sigma_2\) (and there can be more than one maxmin strategy).

Intuitively, a maxmin strategy \(\sigma^*_1\) maximizes player 1’s worst-case payoff in the situation where player 2 strives to cause the greatest harm to player 1 and knows what strategy player 1 will play.

Similarly, \(\sigma^*_2 \in \Sigma_2\) is a maxmin strategy of player 2 if \[ \sigma^*_2 \in \mathop{\mathrm{argmax}}_{\sigma_2 \in \Sigma_2} \min_{s_1 \in S_1} u_2(s_1, \sigma_2). \]

Assuming a zero-sum game, i.e. \(u_1 = - u_2\), this becomes \[ \sigma^*_2 \in \mathop{\mathrm{argmin}}_{\sigma_2 \in \Sigma_2} \max_{s_1 \in S_1} u_1(s_1, \sigma_2), \tag{4.2}\]

where again \(s_1 \in S_1\) can be swapped for \(\sigma_1 \in \Sigma_1\). Note the same payoff function for both players in (4.1) and (4.2)

Theorem 4.6 (von Neumann) Assume a two-player zero-sum game. Then \[ \max_{\sigma_1 \in \Sigma_1} \min_{s_2 \in S_2} u_1(\sigma_1, s_2) = \min_{\sigma_2 \in \Sigma_2} \max_{s_1 \in S_1} u_1(s_1, \sigma_2). \] Moreover, \(\boldsymbol{\sigma}^* = (\sigma_1^*, \sigma_2^*) \in \Sigma\) is a Nash equilibrium iff both \(\sigma_1^*\) and \(\sigma_2^*\) are maxmin.

The maxmin equality in Theorem 4.6 can be equivalently expressed as \[ \max_{\sigma_1 \in \Sigma_1} \min_{s_2 \in S_2} u_1(\sigma_1, s_2) = -\max_{\sigma_2 \in \Sigma_2} \min_{s_1 \in S_1} u_2(s_1, \sigma_2). \]

So to compute a Nash equilibrium it suffices to compute (arbitrary) maxmin strategies for both players.

4.8.2 Computing Nash Equilibria in Two-player Zero-sum Games

Assume \(S_1 = \left\{1, \dots, m_1\right\}, S_2 = \left\{1, \dots, m_2\right\}\). We want to compute \[ \sigma_1^* \in \mathop{\mathrm{argmax}}_{\sigma_1 \in \Sigma_1} \min_{l \in S_2} u_1(\sigma_1, l). \] Consider a linear program with variables \(\sigma_1(1), \dots, \sigma_1(m_1), v\): \[ \begin{aligned} &\max v &&\\ \text{s.t.}\;&\sum_{k = 1}^{m_1} \sigma_1 (k) u_1(k, l) \geq v, & &l = 1, \dots, m_2, \\ &\sum_{k = 1}^{m_1}\sigma_1(k) = 1, && \\ &\sigma_1(k) \geq 0, & &k = 1 \dots, m_1. \end{aligned} \tag{4.3}\]

Lemma 4.3 For a mixed strategy \(\sigma^*_1\) it holds that \(\sigma_1^* \in \mathop{\mathrm{argmax}}_{\sigma_1 \in \Sigma_1} \min_{l \in S_2} u_1(\sigma_1, l)\) if and only if assigning \(\sigma_1(k) := \sigma_1^*(k)\) and \(v := \min_{l \in S_2} u_1(\sigma_1^*, l)\) gives an optimal solution of the linear program (4.3).

4.9 Summary and Results

As a summary:

- We have reduced the computation of Nash equilibria to the computation of maxmin strategies for both players;

- Maxmin strategies can be computed using linear programming in polynomial time;

- That is, Nash equilibria in zero-sum two-player games can be computed in polynomial time.

We have considered static games of complete information, i.e., “one-shot” games where the players know exactly what game they are playing. We modeled such games using strategic-form games. We have considered both pure strategy setting and mixed strategy setting. In both cases, we considered four solution concepts:

- Strictly dominant strategies;

- Iterative elimination of strictly dominated strategies;

- Rationalizability (i.e., iterative elimination of strategies that are never best responses);

- Nash equilibria.

In pure strategy setting:

- Strictly dominant strategy equilibrium survives IESDS, rationalizability and is the unique Nash equilibrium (if it exists);

- In finite games, rationalizable equilibria survive IESDS, and IESDS preserves the set of Nash equilibria;

- In finite games, rationalizability preserves Nash equilibria.

In mixed setting on the other hand:

- In finite two-player games, IESDS and rationalizability coincide;

- Strictly dominant strategy equilibrium survives IESDS (rationalizability) and is the unique Nash equilibrium (if it exists);

- In finite games, IESDS (rationalizability) preserves Nash equilibria.

The proofs for 2. and 3. in the mixed setting are similar to the corresponding proofs in the pure setting.

Again, using the expected value as we did gives us weird results like IESDS and rationalizability being the same. Strictly dominant strategy equilibria coincide in pure and mixed settings and can be computed in polynomial time. IESDS and rationalizability can be implemented in polynomial time in the pure setting as well as in the mixed setting. In the mixed setting, linear programming is needed to implement one step of IESDS (rationalizability). Nash equilibria can be computed for two-player games

- in polynomial time for zero-sum games (using von Neumann’s Theorem 4.6 and linear programming);

- in exponential time using support enumeration;

- in PPAD using Lemke-Howson (omitted).

4.10 Modes of domination

To simplify, let us consider only pure strategies. Recall that for \(s_i, s_i' \in S_i\) a strategy \(s_i'\) is strictly dominated if \(u_i(s_i, \boldsymbol{s}_{-i}) > u_i(s_i', \boldsymbol{s}_{-i})\) for all \(\boldsymbol{s}_{-i}\in S_{-i}\).

Let \(s_i, s_i' \in S_i\). Then \(s_i'\) is weakly dominated by \(s_i\) if \(u_i(s_i, \boldsymbol{s}_{-i}) \geq u_i(s_i', \boldsymbol{s}_{-i})\) for all \(\boldsymbol{s}_{-i}\in S_{-i}\) and there is \(\boldsymbol{s}_{-i}' \in S_{-i}\) such that \(u_i(s_i, \boldsymbol{s}_{-i}') > u_i(s_i', \boldsymbol{s}_{-i}')\).

Let \(s_i, s_i' \in S_i\). Then \(s_i'\) is very weakly dominated by \(s_i\) if \(u_i(s_i, \boldsymbol{s}_{-i}) \geq u_i(s_i', \boldsymbol{s}_{-i})\) for all \(\boldsymbol{s}_{-i}\in S_{-i}\).

Then we say that a strategy is (strictly, weakly, very weakly) dominant if it (strictly, weakly, very weakly) dominates any other strategy.

Theorem 4.7 Any pure strategy profile \(\boldsymbol{s} \in S\) such that \(s_i\) is very weakly dominant is a Nash equilibrium.

The same claim can be also proven in the mixed strategy setting.